The "A.G.I." category hinders rather than helps in thinking about technological change and human displacement.

"Programs building programs to build super-programs" is not how successful technologies get made and succeed. LLMs will be consequential but it will depend on actual products and their deployment.

Ezra Klein recently interviewed Ben Buchanan, international relations scholar and Special Adviser on AI to the Biden Administration, about what the recent developments in LLMs mean for the future of human jobs. Buchanan, per his LinkedIn page, is currently an “Assistant Professor at Johns Hopkins University School of Advanced International Studies” and has written “three books on AI, cybersecurity, and national security.”

Buchanan hates the term “A.G.I.” (“Artificial General Intelligence”) but uses it anyway to mean something like: new programs that would be able to do certain tasks in a human-like way. He is worried about it. As is Klein. And they are right. But the interview seems to conflate a lot of different things.

First, Klein and Buchanan seem to have very different definitions of A.G.I. Klein is apparently thinking of new products like OpenAI’s recent Deep Research (which, by all accounts, is really, really good), but Buchanan and Klein spend most of the interview talking about the use of AI in adversarial activities like “intelligence analysis and cyberoperations.”

Second, it seems to me that some of the proponents of the view that A.G.I. will change everything have a somewhat naïve view of how technologies actually develop and succeed; a technology doesn’t just succeed because it’s “good”; it succeeds because companies build products for clients and users and people in turn take up those products because they fit in with what they want to do. The A.G.I. people seem to think that once some basic A.G.I. capability is generated, making products is just something that is “downstream” but the history of technology says otherwise.

Which leads to a third and related point. The worries over the implications of LLMs are definitely correct; they almost certainly will impact certain sectors (maybe a lot of them!) but which ones and how is going to depend on a lot more factors than how “intelligent” the underlying model is. For LLMs to displace jobs, someone has to build good products on top of them, and what constitutes a “good product” depends just as much on technology consumers as it does on technology builders. So, yes, we should be thinking about this and probably be worried about this, but talking about “A.G.I.” does not really help matters.

So let’s dig into these three things.

What is A.G.I. and what are we worried about?

The biggest question is: what exactly are we worried about when we talk about A.G.I.? But to be more precise, since no one quite knows what A.G.I. is, what are we talking about when we worry about the long-term effects of programs that already exist in some form?

This is how Klein tries to define A.G.I. in the introduction to the episode:

What they [the people building AI and worried about it] mean is that they have believed, for a long time, that we are on a path to creating transformational artificial intelligence capable of doing basically anything a human being could do behind a computer — but better. They thought it would take somewhere from five to 15 years to develop. But now they believe it’s coming in two to three years, during Donald Trump’s second term. [my emphasis]

The part in bold is worth noting because it is very specific and, I assume, deliberately so. Klein does not say “transformational AI capable of doing anything a human could do”; he says “AI capable of doing basically anything a human could do behind a computer.” This is at once quite broad and quite narrow, especially when compared to the piece on A.G.I. that Klein links to a sentence or two before. The AI developers and entrepreneurs quoted in that piece are not so circumspect; they think A.G.I. is AI that can do anything a human can do, and their concerns range far and wide: many think that A.G.I. means we can do far better science in less time; others think that A.G.I. will be able to do tasks that lawyers and doctors do; still others are worried about war conducted by AI with AI generals and AI drones; some worry that AI will make bioweapons; and still others are worried that AI will power personas that will become our friends and alienate us from actual people.

Compared with these quite out-there scenarios, Klein is saying something very specific: we could have programs doing tasks that humans have usually done sitting behind computers. What do people do sitting behind computers? They read, they write, they make charts, and in many of these tasks, they use computer programs. Now some of these tasks could be done not by a human working with a computer program but by a human delegating the whole task to the computer program. When the writer Timothy Lee tested out OpenAI’s new “Deep Research” tool by giving it real-world tasks that he sourced from working professionals, he found that it did a pretty good job overall. When an architect asked Deep Research to give him a “detailed building code checklist for a 100,000-square-foot educational building,” it produced a report that the architect deemed “better than intern work, and meets the level of an experienced professional” and something that “would take six to eight hours or more to prepare” for a human being.

Presumably, a lot of tasks that involve writing reports or processing a lot of text are going to change in the next few years. We should be thinking a lot about this.

But the interview with Buchanan itself isn’t particularly concerned with these tasks. It is mostly concerned with the intricacies of war, espionage, and diplomacy.

Buchanan himself hates the term A.G.I. but this is how he ends up defining the term:

[Buchanan]: A canonical definition of A.G.I. is a system capable of doing almost any cognitive task a human can do. I don’t know that we’ll quite see that in the next four years or so, but I do think we’ll see something like that, where the breadth of the system is remarkable but also its depth, its capacity to, in some cases, exceed human capabilities, regardless of the cognitive discipline

[Klein]: Systems that can replace human beings in cognitively demanding jobs.

[Buchanan]: Yes, or key parts of cognitive jobs. Yes. [my emphasis]

This definition starts off much vaguer (a system that is capable of “depth” and has a “capacity” that “in some cases, exceed[s] human capabilities regardless of cognitive discipline”) but then ends up being something like a system that can do “cognitively demanding jobs” at the level of human beings.

The key here is, of course, which jobs count and what “cognitively demanding” means. But the examples that Buchanan ends up giving in his interview aren’t actually about any of this; there is a lot of discussion about diplomacy, the US-China relationship, state-backed cyberoperations and hacking, espionage, and so on. Buchanan worries about AI-based programs being able to do these things at a scale never seen so far and what that might lead to in terms of the geopolitical situation. Fair enough, and the interview is quite interesting on the two fronts of international relations and domestic regulation. But we learn little about what is supposedly the ticking time-bomb: the replacement of humans by machines for cognitively demanding jobs (there’s a little bit of it at the end when Klein mentions marketers and coders as being impacted differently by AI).

Of course, this also raises the question: what does all of this have to do with so-called A.G.I.? Here, Buchanan makes one statement that, I believe, is key to his thinking and to the thinking of others who fear A.G.I.:

I do think there are profound economic, military and intelligence capabilities that would be downstream of getting to A.G.I. or transformative A.I. And I do think it is fundamental for U.S. national security that we continue to lead in A.I. [my emphasis]

Buchanan and Klein both have a particular model of how technological development occurs, what I’ll call, for lack of a better word, the linear model. They think that there is an underlying mechanism (which they are calling A.G.I.) and this underlying mechanism, once discovered or invented, will just affect everything else that is “downstream.” So once we have A.G.I. (whatever that is), this general capability leads to better programs for other, very specific things: programs doing tasks related to law or marketing or journalism and so on.

The question is: is this the right way to think about technological change?

Is the underlying “science” all that is necessary to make successful technologies?

I think the history of technology shows that this is almost certainly not how technological change happens. Successful technologies—in the sense that these are widely adopted and/or commercialized—are not just downstream of “science” and they depend on a lot more than just the technical inventions.

I’ll give two examples here: Edison’s success at building an electricity grid to power lamps in individual homes and a much more small-scale example of doctors and pagers.

The venerable historian Thomas Hughes has described in rich detail how Edison was able to successfully build electricity grids. Edison was famous for using science to solve problems, but Hughes points out that Edison’s science came from his unique grasp of the economics of electricity grid-building. As Hughes writes in his paper “The Electrification of America”:

Edison’s method of invention and development in the case of the electric light system was a blend of economics, … experimentation, and science.

The point here is that the science—the underlying principles—came at the end and were probably the least important. How so?

Consider the invention Edison is most famous for: creating a durable light bulb that works in an electrical system. To come up with this light bulb, he experimented with thousands of materials and types of filaments before he found the right material for the job. This is R&D at its best and most grueling.

But the search for the filament was inspired by one of Edison’s main goals, a goal that was less about his skills as a scientist or inventor and more about his genius as a businessman and system-builder. Edison had decided that no consumer would pay more for electric lamps than they did for gas lamps. To make that possible, he knew that he would have to keep the cost of his lighting system low enough.

What were the costs? Hughes provides us with this cost estimate from one of Edison’s notebooks:

One of the biggest capital costs for Edison was the price of the copper conductors which, as you can see, is the highest number in that cost estimate (57k).

But there was another facet: when electricity goes through copper wires, some of it is lost as heat; this is essentially a loss and the goal of any electrical network should be to minimize this loss.

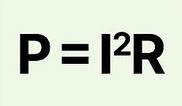

This heat loss depends on a few things: it is proportional to the square of the current, to the length of the wire, and to the resistivity of the wire’s material. And it’s inversely proportional to the cross-sectional area of the wire; i.e., the thicker the wire, the less the heat loss.
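Written out in standard textbook notation (my own symbols, not a transcription of Edison’s notebook or of Hughes’s paper), the relationship is roughly:

$$P_{\text{loss}} = I^{2} R_{\text{wire}}, \qquad R_{\text{wire}} = \rho \, \frac{L}{A}$$

Here $I$ is the current, $L$ the length of the wire, $A$ its cross-sectional area, and $\rho$ the resistivity of the material it is made of.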

Edison had some options: he could make the wire thicker but that would increase the copper he would need to build the wires which would hugely increase his capital costs (again, the copper wires are the largest item).

If his copper wires were somewhat on the thinner side, then he would have to make sure that the current passing through it was somewhat low (see the formula).

How would that work? It was in trying to fix this problem that he had an inspiration: he thought of the newly articulated Ohm’s law. Ohm’s law says that for the same voltage, you can reduce the current by increasing the resistance of the circuit. What if, Edison thought, he used a high-resistance filament for the bulb? (This was a very different choice from other inventors who were trying low-resistance filaments.) That would increase the resistance of the circuit, decrease the current, which would reduce the heat dissipated and allow him to keep his copper wires reasonably thin.
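To make the reasoning concrete, here is a minimal sketch of the trade-off in Python. It assumes a toy circuit with a single lamp in series with its copper feeder wire; the function name and all of the numbers are illustrative assumptions of mine, not Edison’s actual figures.

```python
def wire_heat_loss(voltage, wire_resistance, filament_resistance):
    """Return (current in amps, heat lost in the wire in watts).

    Toy model: one filament in series with its feeder wire.
    All inputs are hypothetical values chosen for illustration.
    """
    total_resistance = wire_resistance + filament_resistance
    current = voltage / total_resistance       # Ohm's law: I = V / R
    loss = current ** 2 * wire_resistance      # Joule heating: P = I^2 * R
    return current, loss


VOLTAGE = 100.0          # volts (assumed)
WIRE_RESISTANCE = 1.0    # ohms; a thin, cheap copper wire (assumed)

for label, filament_resistance in [
    ("low-resistance filament", 4.0),     # ohms (assumed)
    ("high-resistance filament", 99.0),   # ohms (assumed)
]:
    current, loss = wire_heat_loss(VOLTAGE, WIRE_RESISTANCE, filament_resistance)
    print(f"{label}: current = {current:.1f} A, wire loss = {loss:.1f} W")
```

With these made-up numbers, the high-resistance filament cuts the current from 20 A to 1 A and the heat lost in the wire from 400 W to 1 W, without making the wire any thicker. The sketch ignores everything else that matters in a real system, such as how much light each lamp gives off.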

So he searched high and low for a filament that was high-resistance, durable, and gave off light that was useful to people. And it worked: he was able to minimize heat losses, keep the cost to consumers low (roughly the same as gas lights), and produce good light.

Was Edison’s electricity grid successful and widely adopted? Undoubtedly. But not because Edison discovered some underlying science. It was because Edison was able to use his science to make a good product. But the goodness of his product depended on a lot more factors; he kept his capital costs low so that he could sell to consumers at a competitive price. Had he perhaps not been able to do so, it is possible that his electricity grids would have been unsuccessful and it would be someone else, not Edison, whom we would credit with the idea of electricity grids (which would also look different from his).

Successful products—i.e., commercialization—are not necessarily just downstream of some underlying technical invention. Whether a product succeeds has a lot to do with factors that are not about the underlying principle of the technology itself.

Here’s another more contemporary example: this Planet Money story about doctors and pagers. You might wonder: why do doctors in the emergency room still use the pager system when they wish to consult with specialists or consultants? Isn’t it better to just use text messaging which is faster, simpler, and more efficient? After all, the underlying invention—messaging via text—already exists.

One hospital decided to try this. They built their own electronic messaging system (call it a WhatsApp for the hospital) and deployed it and found that, after some initial success, the system stopped being used so much. In the beginning, the doctors realized that the electronic messaging system had capabilities that the pager system did not; they could actually send photos!

FOUNTAIN: But pretty soon, as the ER doctors and the doctors they were texting in other departments got used to the new app, it really started to prove itself. One of the real game changers was the doctors could now send photos back and forth.

GUO: Toph remembers treating a patient who came in with a really bad broken leg. Like, the bone was literally sticking out of the skin.

PEABODY: So I took a secure photo and texted it to the orthopedic surgeon and just put the room the patient was in, no other information, and I got a response immediately that said, be right there.

GUO: Oh, wow.

Wow is right. But there were rumblings that things were not quite right. The consulting doctors especially, “the ones on the receiving end of all those texts and pages from the ER,” started to complain about this new system. Why? It turned out that “this app that was supposed to be making communication between doctors easier - maybe it was actually making communication too easy.”

How so? The junior-most doctors in the ER now just had more opportunity to ask their consultant brethren for advice. Even if they knew what to do, why not just ask someone to check if they were doing the right thing? Oh, and send the photo while they were at it. The messaging traffic increased and the consultants, the ones on the receiving end of all these new messages, started to push back. They didn’t want to be consulted all the time, on problems they viewed as trivial. It turned out that the older pager system’s higher transaction costs (paging took effort) meant that only the most important things were getting paged. Now that the transaction costs were lower, there were more messages and so the consultants pushed back.

So ultimately, the new electronic system was not successful in the sense that it did not garner widespread adoption. Not because its underlying technical fundamentals were bad but because it simply was not a good fit for the dynamics of this particular workplace with its lower-paid residents and higher-paid consultants. Success depends on more factors than just the fundamentals of the technology.

How will LLMs impact human jobs?

This is not to say that LLMs will not have some disruptive consequences for human jobs; it’s to say that those consequences will depend on the products that get designed, the features they have, whether customers can afford those products, and whether those products can fit the institutional cultures of these customer organizations. Designing a good product is not simply downstream of some capability (i.e., “A.G.I.”); nor is a good product always a successful product.

You can see this in the trajectory of OpenAI itself. Originally conceived as a research organization whose goal was to create A.G.I. (or some sort of fundamental underlying technology), OpenAI first became famous for creating its GPT models. As someone who follows these things, I heard references to GPT-1 and GPT-2 all the time and I was aware that these were powerful models. But OpenAI’s real claim to fame came when it invented and released ChatGPT. ChatGPT was a product, not a model. Its success certainly depended on the quality of the underlying GPT model, but making ChatGPT was more than just putting a chat interface around GPT-1 or GPT-2. It involved the engineers at OpenAI figuring out its interface; figuring out its implementation; eventually, it also involved them putting a lot of safeguards around it to prevent it from being prompt-hacked.

Today, OpenAI is arguably not a research organization anymore; it is trying to be (and almost is) a product organization, turning out products and competing with other companies with similar products. A recent piece in the Wall Street Journal, titled “Turning OpenAI Into a Real Business Is Tearing It Apart,” argued that it was this change that was behind OpenAI’s recent trouble with keeping its original staff. The author Deepa Seetharaman writes that:

Some at the company say such developments are needed for OpenAI to be financially viable, given the billions of dollars it costs to develop and operate AI models. And they argue AI needs to move beyond the lab and into the world to change people’s lives.

Others, including AI scientists who have been with the company for years, believe infusions of cash and the prospect of massive profits have corrupted OpenAI’s culture.

One thing nearly everyone agrees on is that maintaining a mission-focused research operation and a fast-growing business within the same organization has resulted in growing pains.

The article quotes an early OpenAI employee:

“It’s hard to do both at the same time—product-first culture is very different from research culture,” said Tim Shi, an early OpenAI employee who is now chief technology officer of AI startup Cresta. “You have to attract different kinds of talent. And maybe you’re building a different kind of company.”

Products based on LLMs will almost certainly be invented and these products can certainly upend different sectors and occupations. The aforementioned Deep Research is currently priced at something like 200 dollars a month, a steep amount for me, but perhaps not for other companies. Its success depends not just on how its underlying technology works (and what does “underlying” mean anyway?) but also on whether customers can afford it and whether it gives them an output that matches what they would like to see. It also depends, in some sense, on how the internet changes in response to products like Deep Research, which relies, to a large extent, on finding documents to parse. What if the quality of documents declines or what if internet companies paywall their servers to keep Deep Research out?

It would be much better for analysts who are interested in how LLMs are going to transform occupations to look at actual products that are invented and the extent of their adoption in different sectors. Will companies turn to LLM-based products to use in their call centers and customer service operations? Will lawyers and researchers and executives start using Deep Research and cut down on the number of lower-level employees who made such reports? Can their organizations even afford it? Will we perhaps get a separate Deep Research for particular fields, say, one for medicine and one for law? We don’t know yet but these products will almost certainly transform tasks that involve text processing in multiple fields. Will other vendors create specialized LLM products that allow scientists to do drug discovery? Would they be priced such that most scientists could afford them or only those at the richest organizations? Or would scientists write these products themselves? Many of these events will play out over the next 10 years or so and yes, some of these events can have far-reaching consequences for that particular sector. (There will almost certainly be national security products that use LLMs and those vendors will be selling their products to governments but I assume those products would not be as known to most regular people.)

Which is to say: yes, change is definitely coming. But it is not “downstream” of some fundamental A.G.I. capacity. It’s going to come from the kinds of products that companies come up with and how those products fit customer demands. The LLM-based future is going to be interesting.

PS: This post has gotten way too long but there is another kind of argument that AI people sometimes make that I don’t think is helpful. Sometimes, the argument is that we don’t have to build products; if we just build A.G.I., then it will just build those products (or something to that effect). But that doesn’t work. Even if there is something called A.G.I. and it can build products, those products are still subject to the same rules as products made by human teams; they have to work for customers and they have to fit changing conditions.
